Beginner's guide to AI

Brief summary of AI (History | Uses | Resources)

- Artificial Intelligence

- Machine Learning in Depth

- Limitations of Machine Learning

- Overfitting

- Transfer Learning & Its applications

- Keywords, Notations, & Few Jargons

- Useful links

Artificial Intelligence

Artificial intelligence is intelligence exhibited by computers rather than by humans. It can be built with machine learning, with rule-based approaches, or with a combination of both.

Applications:

- Robotics:

  - Boston Dynamics' Spot robot uses onboard AI to avoid obstacles and plan a path to a target location

- Playing games:

  - DeepMind's systems have beaten a world champion at Go (AlphaGo), defeated the strongest chess engines (AlphaZero), and exceeded the human baseline on all 57 Atari benchmark games (Agent57)

  - OpenAI Five, trained with reinforcement learning, won 99.4% of its Dota 2 matches against public players over about three days

- Computer Vision:

  - Tesla Autopilot uses cameras and a few other sensors to provide driver-assistance features such as lane keeping and adaptive cruise control; the driver must still supervise it

- Biology:

  - AlphaFold 2 predicts protein structures with a median accuracy score (GDT) above 90 out of 100 on the CASP14 benchmark

- Natural Language Processing (NLP):

  - Google Translate uses neural machine translation models to translate between more than 100 languages

Machine Learning in Depth

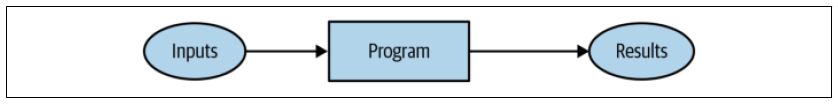

Rule-based: a particular problem is solved with a set of rules written by hand.

Machine Learning (ML): a way of teaching machines to solve a problem from data, rather than explicitly coding the answer for each situation.
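
To make the contrast concrete, here is a minimal sketch with made-up temperature data. The function names, the 30 °C rule, and the tiny dataset are all assumptions for illustration: the first function hard-codes a rule, while the second derives its threshold from labeled examples.

```python
# Rule-based: a person writes the rule by hand.
def is_hot_rule(temp_c):
    return temp_c >= 30  # threshold chosen by the programmer (illustrative)


# Machine learning: the threshold is learned from labeled examples.
def learn_threshold(temps, labels):
    """Pick the candidate threshold that misclassifies the fewest examples."""
    best_t, best_errors = None, len(temps) + 1
    for t in sorted(temps):
        # Count examples where "temp >= t" disagrees with the label.
        errors = sum((x >= t) != y for x, y in zip(temps, labels))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


# Hypothetical training data: temperature in Celsius and whether people called it hot.
temps = [12, 18, 22, 27, 31, 35]
labels = [False, False, False, True, True, True]

threshold = learn_threshold(temps, labels)
print("hand-written rule says 27 is hot:", is_hot_rule(27))
print("learned threshold:", threshold)
```

With different labeled data the learned threshold changes automatically, whereas the hand-written rule has to be edited by a person.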

Types of Machine Learning:

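- Supervised Learning - learns from data in which every example has a correct label

- Semi-supervised Learning - learns from data in which only some of the examples are labeled

- Unsupervised Learning - finds structure in unlabeled data, for example by clustering similar items

- Reinforcement Learning - learns by acting in an environment, following a policy, and receiving rewards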

Types of Algorithms in Machine Learning:

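- Classification - predicts which class an input belongs to

- Regression - predicts a numeric value within a continuous range

- Clustering - groups similar items together

- Sequence Prediction - predicts the next items in a sequence, as used in recommendations

- Style Transfer - applies the style of one input to another, as in image filters and translators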
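
The difference between the first two entries in the list above can be sketched in a few lines. This is only an illustration under simple assumptions: a 1-nearest-neighbour classifier and a least-squares line stand in for the two families, and the fruit weights and function names are made up.

```python
def classify_1nn(train, x):
    """Classification: return the label of the closest training point."""
    nearest = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest[1]


def fit_line(xs, ys):
    """Regression: least-squares slope and intercept for y = a*x + b."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    a = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    b = mean_y - a * mean_x
    return a, b


# Hypothetical data: (weight in grams, fruit name).
fruit = [(110, "apple"), (120, "apple"), (180, "orange"), (200, "orange")]
predicted_class = classify_1nn(fruit, 170)   # the answer is one of a fixed set of classes

a, b = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
predicted_value = a * 5 + b                  # the answer is a number on a continuous scale

print(predicted_class, predicted_value)
```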

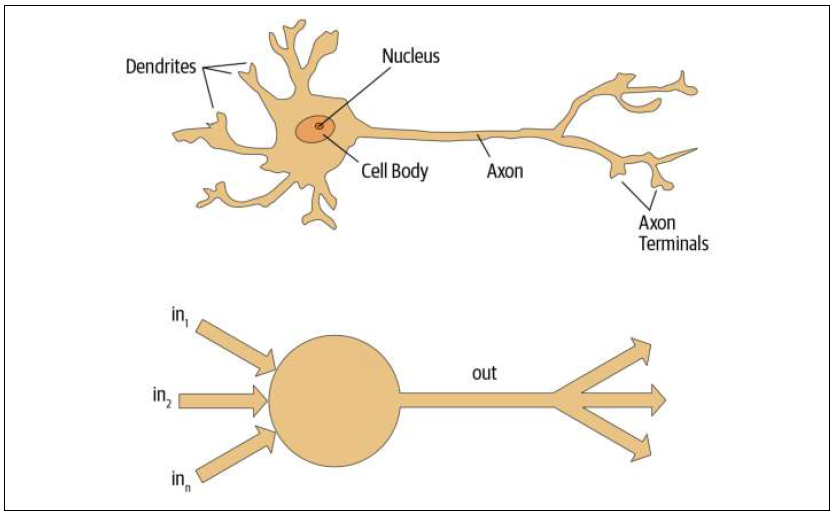

Deep Learning (DL): a subset of machine learning that uses layers of interconnected nodes (neurons, each with weights and a bias). In theory, a large enough network can approximate almost any function, which makes the approach highly flexible and adaptable. Its design is loosely inspired by the human brain.

Neural Networks (NN): an arrangement of artificial nodes that emulates human neurons. The artificial neurons are just numbers, combined through a few transformations and dot products.
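
As a rough sketch of "transformations and dot products", the snippet below computes one artificial neuron and a tiny two-layer network by hand. The weights, biases, and the choice of a sigmoid activation are arbitrary assumptions for illustration, not values from a trained model.

```python
import math


def neuron(inputs, weights, bias):
    """Dot product of inputs and weights, plus a bias, squashed by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


x = [0.5, -1.0]                                           # input values
hidden = layer(x, [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])  # hidden layer with two neurons
output = neuron(hidden, [1.5, -2.0], 0.2)                 # single output neuron

print(hidden, output)
```

Training a network means adjusting those weights and biases so that the output moves closer to the desired answer.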

History of ML

- AI winter: periods in which AI attracted a lot of attention, but practical implementations did not live up to expectations and few people took it seriously.

- Big Data: in the 80s and 90s data was being produced, but limited computational power and low-quality data meant AI never took off.

- Technology: today we have fast, easily accessible computational power, large amounts of good-quality data, and improved algorithms.

Parallel Distributed Processing (PDP)

The PDP framework, introduced by Rumelhart, McClelland and the PDP Research Group in 1986, describes a system that closely resembles a modern neural network. It requires:

- A set of processing units

- A state of activation

- An output function for each unit

- A pattern of connectivity among units

- A propagation rule for propagating patterns of activities through the network of connectivities

- An activation rule for combining the inputs impinging on a unit with the current state of that unit to produce an output for the unit

- A learning rule whereby patterns of connectivity are modified by experience

- An environment within which the system must operate
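
One way to read the list above is to map each component onto a very small program. The sketch below is an assumption-heavy illustration, not the formulation from the PDP work itself: it uses a single sigmoid unit, a simple error-correcting update as the learning rule, and the logical OR task as the environment. The class and method names are hypothetical.

```python
import math


class TinyPDP:
    """A single processing unit, annotated with the PDP components."""

    def __init__(self, n_inputs, learning_rate=0.5):
        self.weights = [0.0] * n_inputs      # pattern of connectivity among units
        self.learning_rate = learning_rate
        self.activation = 0.0                # state of activation

    def propagate(self, inputs):             # propagation rule: weighted sum of inputs
        return sum(w * x for w, x in zip(self.weights, inputs))

    def activate(self, net_input):           # activation rule: sigmoid squashing
        self.activation = 1.0 / (1.0 + math.exp(-net_input))
        return self.activation

    def output(self, inputs):                # output function: threshold the activation
        return 1 if self.activate(self.propagate(inputs)) >= 0.5 else 0

    def learn(self, inputs, target):         # learning rule: nudge weights to reduce the error
        error = target - self.activate(self.propagate(inputs))
        for i, x in enumerate(inputs):
            self.weights[i] += self.learning_rate * error * x


# Environment: the logical OR task. The first input is a constant 1 that acts as a bias.
environment = [([1, 0, 0], 0), ([1, 0, 1], 1), ([1, 1, 0], 1), ([1, 1, 1], 1)]

unit = TinyPDP(n_inputs=3)
for _ in range(1000):                        # experience: repeated exposure to the environment
    for inputs, target in environment:
        unit.learn(inputs, target)

results = [unit.output(inputs) for inputs, _ in environment]
print(results)
```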

Limitations of Machine Learning

- A model needs a sufficient amount of good-quality data

- For supervised learning, the collected data must also be labeled

- A model learns only the patterns that exist in its dataset

- A model only predicts outcomes (such as classes); it does not recommend actions

- Feedback loops: some models are biased towards one region of the dataset. When such a model's predictions influence the new data it is retrained on, the bias reinforces itself and the model becomes more and more biased

Overfitting

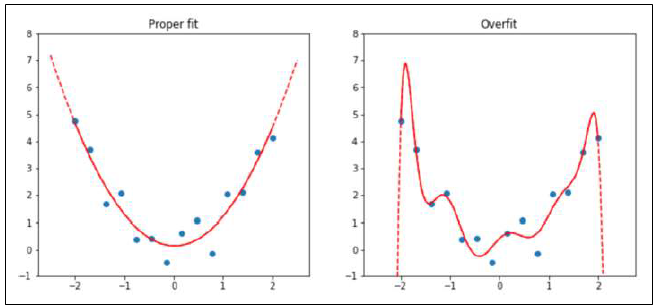

Overfitting is a major problem when building an ML/DL model. It happens when the model memorizes its training data instead of learning patterns that generalize.

An overfit model predicts the training dataset accurately but performs noticeably worse on data it has not seen (real-world data or the testing dataset).

As the picture above shows, a proper fit has lower variance than an overfit model.

Variance → how much the model's predictions change when it is trained on different samples of the data (lower variance is better)

We can catch overfitting by evaluating the model on the validation dataset after every epoch and stopping training once validation accuracy declines steadily over several epochs. If that happens, try shuffling the data, improving its quality, or generating more and better data.
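
The stopping rule described above is often called early stopping. Here is a minimal sketch of the idea, assuming the validation accuracy for each epoch is already available as a list; in a real training loop each value would come from evaluating the model after that epoch. The function name, the `patience` parameter, and the accuracy numbers are illustrative assumptions.

```python
def early_stopping_epoch(val_accuracy_per_epoch, patience=2):
    """Return (best_epoch, best_accuracy).

    Scanning stops once `patience` consecutive epochs fail to beat the best
    validation accuracy seen so far.
    """
    best_acc, best_epoch, epochs_without_gain = float("-inf"), -1, 0
    for epoch, acc in enumerate(val_accuracy_per_epoch):
        if acc > best_acc:
            best_acc, best_epoch, epochs_without_gain = acc, epoch, 0
        else:
            epochs_without_gain += 1
            if epochs_without_gain >= patience:
                break  # validation accuracy has stopped improving
    return best_epoch, best_acc


# Hypothetical validation accuracy: it rises, then falls as the model starts to overfit.
history = [0.71, 0.78, 0.82, 0.81, 0.79, 0.77]
best_epoch, best_acc = early_stopping_epoch(history)
print(best_epoch, best_acc)
```

In practice you would keep the model weights saved at the best epoch rather than the weights from the final epoch.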

Transfer Learning & Its applications

Transfer Learning: the process of reusing a pretrained model to reach a new objective much faster and usually with better accuracy than training from scratch.

Applications (the examples below all reuse computer vision models):
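
A toy sketch of the idea, under loud assumptions: the "pretrained" part here is just a fixed 2x2 weight matrix standing in for a real pretrained network, and only a small new output layer (the "head") is trained on the new task. All names and numbers are hypothetical.

```python
import math

# Stand-in for a pretrained model body. These weights stay fixed ("frozen").
PRETRAINED_W = [[1.0, -1.0], [0.5, 0.5]]


def pretrained_features(x):
    """Frozen feature extractor: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            f = pretrained_features(x)
            p = 1.0 / (1.0 + math.exp(-(sum(wi * fi for wi, fi in zip(w, f)) + b)))
            for i in range(len(w)):
                w[i] += lr * (y - p) * f[i]   # update the head weights only
            b += lr * (y - p)
    return w, b


def predict(x, w, b):
    f = pretrained_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b >= 0 else 0


# Hypothetical new task with only four labeled examples.
new_task = [([2.0, 0.0], 1), ([3.0, 1.0], 1), ([0.0, 2.0], 0), ([1.0, 3.0], 0)]
head_w, head_b = train_head(new_task)
print([predict(x, head_w, head_b) for x, _ in new_task])
```

Because the feature extractor is reused as-is, only three numbers have to be learned for the new task, which is why transfer learning can work with little data and little training time.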

-

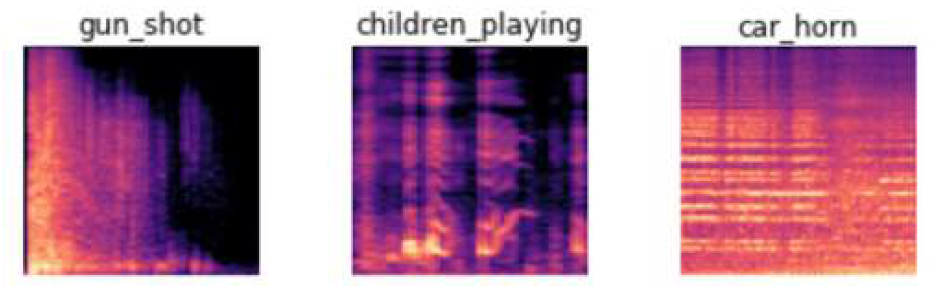

Sound recognition is done by creating spectrograms

- Fraud detection, by converting mouse behavior into an image

-

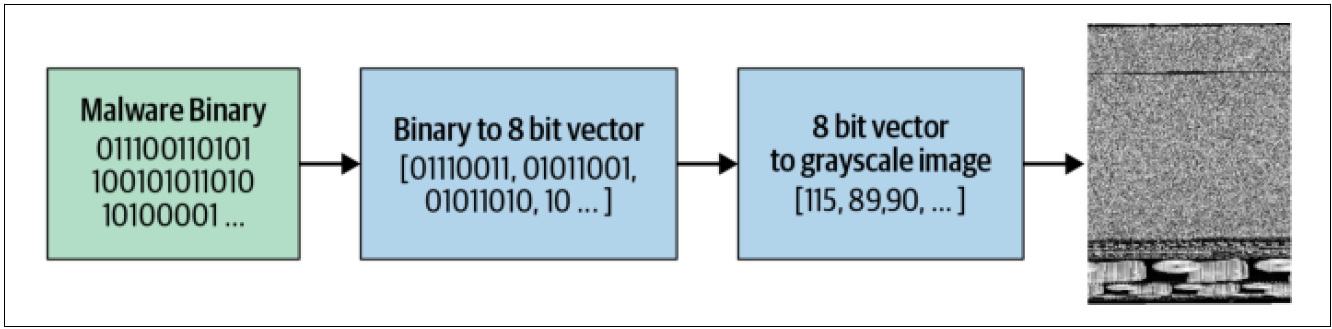

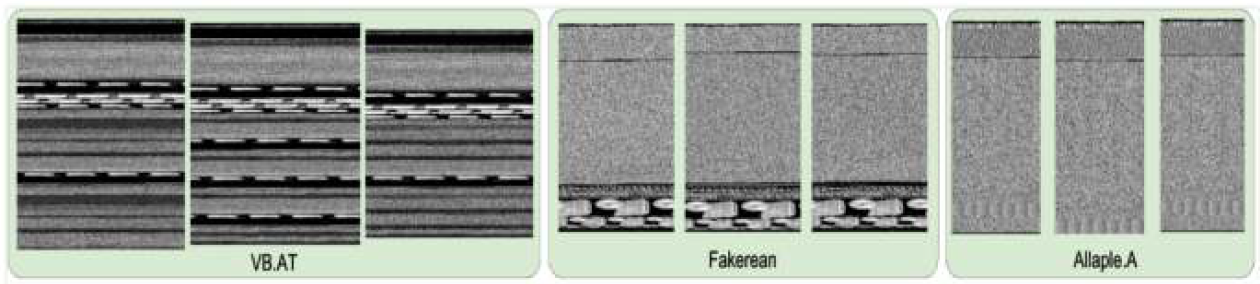

Malware classification with Deep Convolutional Neural Networks Process:

Few examples:
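
Going back to the sound recognition example, the snippet below sketches how audio samples can be turned into a spectrogram, which is a 2D grid of numbers that an image model can consume. It is a simplified illustration: it uses a naive discrete Fourier transform with no window function, and `frame_size`, `hop`, and the test tone are assumed values. Real projects would normally use an audio or signal-processing library instead.

```python
import cmath
import math


def spectrogram(signal, frame_size=8, hop=4):
    """Split the signal into overlapping frames and return DFT magnitudes per frame.

    Each row is one time frame; each column is one frequency bin (0..frame_size//2).
    """
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            s = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n in range(frame_size)
            )
            magnitudes.append(abs(s))
        frames.append(magnitudes)
    return frames


# A pure tone that completes 2 cycles in every 8-sample frame.
signal = [math.sin(2 * math.pi * 2 * n / 8) for n in range(32)]
image = spectrogram(signal)

for row in image:
    print([round(v, 2) for v in row])
```

For this tone nearly all of the energy lands in frequency bin 2 of every frame, which is the kind of visual pattern a pretrained vision model can learn to recognize.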

Keywords, Notations, & Few Jargons

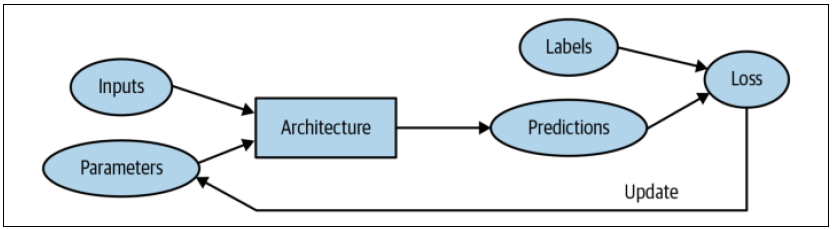

- Architecture: the structure of the model (how the layers are arranged and how many neurons each layer has)

- Parameters: the values the model learns during training; another name for weights (and biases)

- Loss: a measure of how badly the model performs (lower is better), chosen to drive training via stochastic gradient descent (SGD)

- Independent variables: the inputs to the model (e.g. the flattened pixel values of an image)

- Targets: the labels in the dataset

- Predictions: the model's outputs for the inputs passed to it

- Labels: the data we want to predict

- Epochs: the number of complete passes the model makes over the training dataset

- Fit: the function that starts training the model on the input data and labels

- Metric: a measure meant for humans, showing how well the model performs on the testing dataset

- CNN: Convolutional Neural Networks are similar to ordinary neural networks but include filters (convolutions) in the architecture

- Validation set: the part of the dataset set aside to evaluate the model during training, before the final evaluation on the testing set
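
Several of these terms can be seen together in one small training loop. The sketch below is an illustration under simple assumptions: a one-input linear model, squared-error loss, plain SGD, and made-up data that follows y = 2x + 1. The function names are hypothetical and do not belong to any particular library.

```python
def fit(inputs, targets, epochs=200, lr=0.05):
    """Train y = w*x + b with SGD on squared-error loss and return the parameters."""
    w, b = 0.0, 0.0                          # parameters (weight and bias)
    for _ in range(epochs):                  # one epoch = one full pass over the data
        for x, y in zip(inputs, targets):    # x: independent variable, y: target/label
            pred = w * x + b                 # prediction
            grad = 2 * (pred - y)            # derivative of the loss (pred - y)**2 w.r.t. pred
            w -= lr * grad * x               # SGD step on the weight
            b -= lr * grad                   # SGD step on the bias
    return w, b


def mean_abs_error(inputs, targets, w, b):
    """Metric: average absolute error, easy for a human to interpret."""
    return sum(abs(w * x + b - y) for x, y in zip(inputs, targets)) / len(inputs)


train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]   # training set
valid_x, valid_y = [4.0, 5.0], [9.0, 11.0]                       # validation set

w, b = fit(train_x, train_y)
print("parameters:", w, b)
print("validation metric:", mean_abs_error(valid_x, valid_y, w, b))
```

The loss is what the training loop minimizes, while the metric is what you read afterwards to judge the model on data it did not train on.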

Useful links

- Practical Deep Learning : https://course.fast.ai/

- Tutorials of ML : https://machinelearningmastery.com/

- Quality ML Information : https://www.youtube.com/c/ArxivInsights

- Latest ML News : https://www.youtube.com/c/YannicKilcher
